Graph neural networks are gaining popularity because of their applications in a wide range of predictive analytics tasks. When data is naturally represented as a graph, graph neural networks often outperform other machine learning and deep learning algorithms. They are also being widely applied in natural language processing because of their ability to model complex text representations. In this article, we discuss one such application of graph neural networks: text classification. First, we will see how this framework models text representations, and then we will explore how it can be used for text classification. The major points covered in this article are listed below.

Table of Contents

- Deep learning in text classification

- Graph Neural Networks

- Graph Convolutional Network

- Text Graph Convolutional Networks (Text GCN)

- Implementation of Text GCN in Python

Deep learning in Text Classification

Deep learning studies can be roughly divided by the two major model families they use: convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Studies on text classification can also be divided into two groups. The first group focuses on building models on top of word embeddings. Various studies have shown that the success of a text classification model depends heavily on the effectiveness of its word embeddings. The second group focuses on learning document and word embeddings together.

Both CNNs and RNNs can be used for text classification. CNNs, which are mostly used in computer vision, are applied to text through one-dimensional convolutions, while LSTM (long short-term memory) networks, a special type of RNN, can give better performance in text classification. These models have been further extended with mechanisms such as attention, which increase the flexibility of text representations and can be used as an integral part of a deep learning model. Deep learning methods and their extensions are widely used. They are good at capturing information in local consecutive word sequences, but, unlike traditional methods that learn from corpus-level statistics, they may ignore the global word co-occurrence information in a corpus.

Graph Neural Networks

Applications of graph neural networks have recently been growing rapidly across multiple domains. A number of studies have generalized well-established neural networks such as CNNs, which apply to regular grid structures, so that they work on arbitrarily structured graphs. Several graph neural networks have been proposed for text classification. Before looking at any of them, let us first understand graph neural networks in general, which will give us a clearer picture of the model discussed later.

Graph

A graph is a data structure that consists of vertices and edges. It can be written in terms of its set of vertices V and its set of edges E:

G = ( V , E )

The image below shows a directed graph.

The type of the edges in a graph depends on the directional dependencies between the vertices. Edges can be of two types: directed or undirected.
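As a minimal sketch of the definition G = (V, E), a graph can be stored as a list of vertices and a list of edges, from which an adjacency structure is built. The vertex names and the `build_adjacency` helper are illustrative assumptions and do not come from any library.

```python
def build_adjacency(vertices, edges, directed=True):
    """Return a dict mapping each vertex to the set of its neighbours."""
    adjacency = {v: set() for v in vertices}
    for source, target in edges:
        adjacency[source].add(target)
        if not directed:
            # An undirected edge can be traversed in both directions.
            adjacency[target].add(source)
    return adjacency


vertices = ["a", "b", "c"]
edges = [("a", "b"), ("b", "c")]

directed_graph = build_adjacency(vertices, edges, directed=True)
undirected_graph = build_adjacency(vertices, edges, directed=False)
```

In the directed version, `b` is reachable from `a` but not the other way round; in the undirected version both directions are stored.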

Method of Graph Neural Network

As the name suggests, a neural network that operates on a graph is called a graph neural network. A typical task for such a network is node classification, where every node in the graph is assigned a label predicted by the network.

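Suppose each node v is characterized by its features x_v and is associated with a ground-truth label t_v. Given a partially labelled graph G, the goal is to use the labelled nodes to predict the labels of the unlabelled ones. To do so, the network learns a d-dimensional state vector h_v for each node, which contains information about its neighbourhood. Mathematically,

$$h_v = f\left(x_v,\; x_{co[v]},\; h_{ne[v]},\; x_{ne[v]}\right)$$

where $x_{co[v]}$ denotes the features of the edges connected to v, $h_{ne[v]}$ denotes the states of the neighbouring nodes of v, and $x_{ne[v]}$ denotes the features of the neighbouring nodes of v.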

Readers who want to learn more about graph neural networks can check this paper.

As discussed in the deep learning section, text classification can be performed using a CNN or an RNN; to see how well LSTMs work for text classification, check this article. Similarly, among graph neural networks we can use either a graph recurrent network or a graph convolutional network. Since the model discussed in this article is a graph convolutional network, we will start with graph convolutional networks and then see how they can be used.

Graph Convolutional Network

When convolution is applied directly on a graph to make predictions, the resulting model is called a graph convolutional network (GCN). More formally, a GCN is a multilayer neural network that induces embedding vectors of nodes based on the properties of their neighbourhoods.

Consider a graph

G = (V, E),

where

V (|V| = n) and E are the sets of nodes and edges, respectively. Every node is assumed to be connected to itself, i.e., (v, v) ∈ E for any v.

X ∈ R^(n×m) is a matrix containing the m-dimensional feature vectors of all n nodes.

A is the adjacency matrix of G.

D is the degree matrix of G, with D_ii = Σ_j A_ij.

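With these definitions, a one-layer GCN computes a new k-dimensional node feature matrix $L^{(1)} \in \mathbb{R}^{n \times k}$ as:

$$L^{(1)} = \rho\left(\tilde{A} X W_0\right)$$

where $\tilde{A} = D^{-1/2} A D^{-1/2}$ is the normalized symmetric adjacency matrix, $W_0 \in \mathbb{R}^{m \times k}$ is a weight matrix, and $\rho$ is an activation function such as ReLU. Stacking multiple layers incorporates information from larger neighbourhoods:

$$L^{(j+1)} = \rho\left(\tilde{A} L^{(j)} W_j\right)$$

where j is the layer number and $L^{(0)} = X$.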
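The one-layer propagation rule, L^(1) = ρ(Ã X W_0) with Ã = D^(-1/2) A D^(-1/2), can be sketched in NumPy as follows. This is only an illustration under assumed inputs: the toy adjacency matrix, the random weights, and the helper names `normalize_adjacency` and `gcn_layer` are made up for this example.

```python
import numpy as np


def normalize_adjacency(adjacency):
    """Compute D^{-1/2} A D^{-1/2} for an adjacency matrix that has self-loops."""
    degrees = adjacency.sum(axis=1)
    inv_sqrt_degrees = 1.0 / np.sqrt(degrees)
    # Entry (i, j) becomes A_ij / sqrt(d_i * d_j).
    return adjacency * inv_sqrt_degrees[:, None] * inv_sqrt_degrees[None, :]


def gcn_layer(norm_adjacency, features, weights):
    """One GCN layer with ReLU activation: ReLU(A_norm @ L @ W)."""
    return np.maximum(norm_adjacency @ features @ weights, 0.0)


# Toy graph: three nodes in a chain, with self-loops on the diagonal.
A = np.array([[1.0, 1.0, 0.0],
              [1.0, 1.0, 1.0],
              [0.0, 1.0, 1.0]])
X = np.eye(3)                      # n x m feature matrix (here m = n = 3)
rng = np.random.default_rng(0)
W0 = rng.normal(size=(3, 2))       # m x k weight matrix with k = 2

A_norm = normalize_adjacency(A)
L1 = gcn_layer(A_norm, X, W0)      # new node features, shape (3, 2)
```

Each row of `L1` mixes the features of a node with those of its direct neighbours, which is why stacking j layers reaches nodes up to j steps away.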

Text Graph Convolutional Networks (Text GCN)

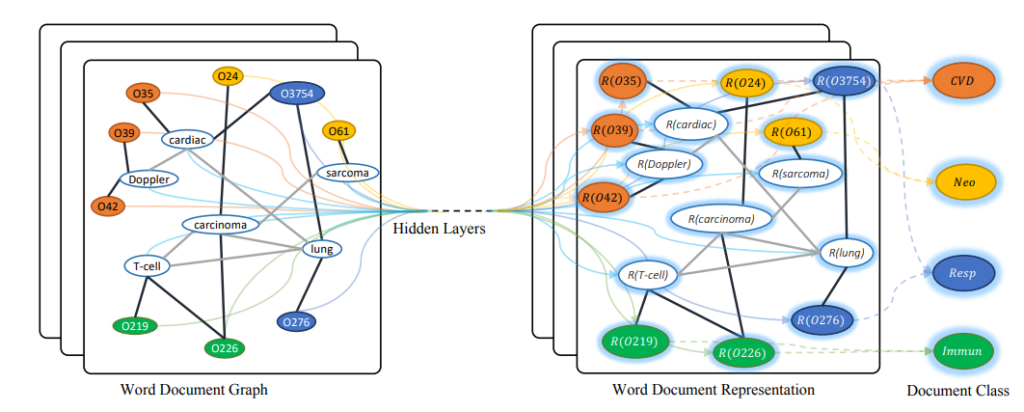

So far we have seen how traditional and deep learning models work on text documents. Here, a graph convolutional network is used to turn the whole corpus into a single heterogeneous text graph, which can explicitly model global word co-occurrence. The heterogeneous text graph contains two types of nodes: word nodes and document nodes.

Text GCN is a model that applies a graph convolutional network to text classification. The figure below shows how Text GCN adapts graph convolution to a text graph.

The number of nodes in the text graph equals the number of documents (the corpus size) plus the number of unique words in the corpus.

Text GCN takes an identity matrix as its input feature matrix, so that every word and document node is represented as a one-hot vector. The model builds edges among nodes based on word occurrence in documents (document-word edges) and word co-occurrence in the whole corpus (word-word edges). The weight of a document-word edge is the TF-IDF (term frequency-inverse document frequency) of the word in the document, where, as usual, the term frequency is the number of times the word appears in the document. To gather co-occurrence statistics, the model slides a fixed-size window over all documents in the corpus, which makes global word co-occurrence information available for prediction and classification.
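To make the document-word edges concrete, here is a small sketch that counts the graph nodes and computes TF-IDF weights in plain Python. The toy corpus, the `tfidf_edges` helper, and the exact TF-IDF variant (raw count times log(N / document frequency)) are assumptions made for illustration; the reference implementation may weight terms differently.

```python
import math
from collections import Counter

# Toy corpus of already tokenized documents (illustrative only).
docs = [
    "graph networks model text".split(),
    "text classification with graph networks".split(),
    "recurrent networks model sequences".split(),
]

vocab = sorted({word for doc in docs for word in doc})
num_nodes = len(docs) + len(vocab)   # document nodes + word nodes

# Number of documents in which each word appears.
doc_freq = Counter(word for doc in docs for word in set(doc))


def tfidf_edges(docs, doc_freq):
    """Return {(doc_index, word): weight} for every word occurring in a document."""
    edges = {}
    for d, doc in enumerate(docs):
        for word, count in Counter(doc).items():
            idf = math.log(len(docs) / doc_freq[word])
            edges[(d, word)] = count * idf   # term frequency = raw count
    return edges


doc_word_edges = tfidf_edges(docs, doc_freq)
```

With this variant, a word that appears in every document (here `networks`) gets a weight of zero, so it carries no information for telling documents apart.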

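Mathematically, the weight of the edge between node i and node j is defined as

$$A_{ij} = \begin{cases} \mathrm{PMI}(i, j) & i, j \text{ are words and } \mathrm{PMI}(i, j) > 0 \\ \text{TF-IDF}_{ij} & i \text{ is a document and } j \text{ is a word} \\ 1 & i = j \\ 0 & \text{otherwise} \end{cases}$$

The pointwise mutual information (PMI) of a word pair i, j is computed as

$$\mathrm{PMI}(i, j) = \log \frac{p(i, j)}{p(i)\,p(j)}, \qquad p(i, j) = \frac{\#W(i, j)}{\#W}, \qquad p(i) = \frac{\#W(i)}{\#W}$$

Here, #W is the total number of sliding windows in the corpus, #W(i) is the number of sliding windows that contain word i, and #W(i, j) is the number of sliding windows that contain both word i and word j. A positive PMI value indicates a high semantic correlation between two words in the corpus, so only word pairs with positive PMI are connected. This is how the relationships between the words in the corpus are used to build the text graph.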
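The sliding-window PMI computation can be sketched in plain Python as follows. The `pmi_edges` helper, the window size of 3, and the toy corpus are assumptions chosen for illustration, not the settings of the reference implementation.

```python
import math
from collections import Counter
from itertools import combinations


def pmi_edges(docs, window_size=3):
    """Return {(word_i, word_j): PMI} for word pairs with positive PMI."""
    windows = []
    for doc in docs:
        if len(doc) <= window_size:
            windows.append(doc)
        else:
            windows.extend(doc[start:start + window_size]
                           for start in range(len(doc) - window_size + 1))

    word_windows = Counter()   # #W(i): windows containing word i
    pair_windows = Counter()   # #W(i, j): windows containing both words
    for window in windows:
        unique = sorted(set(window))
        word_windows.update(unique)
        pair_windows.update(combinations(unique, 2))

    total = len(windows)       # #W: total number of sliding windows
    edges = {}
    for (wi, wj), count in pair_windows.items():
        p_ij = count / total
        p_i = word_windows[wi] / total
        p_j = word_windows[wj] / total
        pmi = math.log(p_ij / (p_i * p_j))
        if pmi > 0:            # keep only positively correlated pairs
            edges[(wi, wj)] = pmi
    return edges


docs = [
    "graph networks model text".split(),
    "text classification with graph networks".split(),
]
word_word_edges = pmi_edges(docs, window_size=3)
```

Each pair is stored once, with the two words in alphabetical order; since the text graph is undirected, the same weight applies in both directions.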

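This text graph can now be fed into the GCN. In Text GCN, the model that operates on the text graph has two layers. The node embeddings produced by the second layer have the same size as the label set and are fed into a softmax classifier:

$$Z = \mathrm{softmax}\left(\tilde{A}\, \mathrm{ReLU}\left(\tilde{A} X W_0\right) W_1\right)$$

where $\tilde{A} = D^{-1/2} A D^{-1/2}$ is the normalized adjacency matrix, $W_0$ and $W_1$ are the weight matrices of the first and second layers, and $\mathrm{softmax}(x_i) = \exp(x_i) / \sum_j \exp(x_j)$.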

A two-layer GCN performs better than a one-layer GCN. It also allows message passing among nodes that are at most two steps away from each other. As a result, although the graph has no direct document-document edges, information can still be exchanged between pairs of documents.
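The forward pass of such a two-layer model, Z = softmax(Ã ReLU(Ã X W_0) W_1), can be sketched in NumPy as follows. Everything here is an assumption for illustration: the random symmetric adjacency matrix stands in for real PMI and TF-IDF weights, the weights are untrained, and `text_gcn_forward` is a hypothetical helper name.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax, subtracting the row maximum for numerical stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def text_gcn_forward(norm_adjacency, features, w0, w1):
    """Two-layer GCN: softmax(A_norm @ ReLU(A_norm @ X @ W0) @ W1)."""
    hidden = np.maximum(norm_adjacency @ features @ w0, 0.0)
    return softmax(norm_adjacency @ hidden @ w1)


n_nodes, hidden_dim, n_classes = 5, 4, 2
rng = np.random.default_rng(0)

# Random symmetric toy adjacency with self-loops, then D^{-1/2} A D^{-1/2}.
A = rng.random((n_nodes, n_nodes))
A = (A + A.T) / 2
np.fill_diagonal(A, 1.0)
d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
A_norm = A * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

X = np.eye(n_nodes)                               # one-hot input features
W0 = rng.normal(size=(n_nodes, hidden_dim))       # first-layer weights
W1 = rng.normal(size=(hidden_dim, n_classes))     # second-layer weights

Z = text_gcn_forward(A_norm, X, W0, W1)           # shape (n_nodes, n_classes)
```

Each row of `Z` is a probability distribution over the classes for one node. In training, only the rows that belong to labelled document nodes would contribute to the loss.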

The Text GCN modelling procedure consists of three major steps.

- Preparation of the data – The model's repository contains functions that automatically perform most of the basic NLP preprocessing tasks, such as cleaning the data and removing stop words.

- Preparation of the text graph – Using the cleaned documents, built-in functions in the repository build the graph in the way discussed earlier in this section. The word-word edges depend on PMI, which captures the semantic correlation between words in the corpus.

- Training of the model – In this step, the main model is trained on the prepared text graph. This model is a two-layer graph convolutional network designed to operate on graph-structured data.
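The data preparation step listed above can be sketched as follows. This is a simplified stand-in rather than the repository's own code: the `clean_document` function and the tiny stop-word list are assumptions made for illustration.

```python
import re

# Tiny illustrative stop-word list; real pipelines use a much larger one.
STOP_WORDS = {"a", "an", "the", "is", "of", "and", "to", "in", "for"}


def clean_document(text, stop_words=STOP_WORDS):
    """Lowercase, replace non-letters with spaces, tokenize, drop stop words."""
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    return [token for token in text.split() if token not in stop_words]


tokens = clean_document("The GCN is a model for Text Classification!")
```

The resulting token lists are what the graph-building step works on when it computes TF-IDF and PMI weights.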

For more information about Text GCN, readers can refer to this GitHub repository. It provides full guidance on using the model with Python, along with tutorials on preparing data for the model.

Final Words

In this article, we saw how deep learning approaches text classification problems and how graph neural networks extend those approaches. Graph neural networks work well in many domains and can also be used for NLP modelling. Several graph neural networks are available for text classification, and Text GCN, the model we tried to understand in this article, is one of them.